Deep Dive into the Go Runtime Scheduler

The Go runtime scheduler is a sophisticated piece of software that orchestrates the execution of goroutines. Its design draws inspiration from both operating system schedulers and user-level thread libraries, combining the best features of both. This deep dive will explore the internal workings of the Go runtime scheduler in detail, complete with practical examples.

Key Components of the Scheduler

- Goroutines (G): Goroutines are lightweight threads managed by the Go runtime. Each goroutine has its own stack, which starts small and grows as needed. This allows the Go runtime to manage thousands of goroutines efficiently.

- Processors (P): Processors are logical entities that represent the resources required to execute goroutines. The number of processors is set by GOMAXPROCS (an environment variable, also adjustable through runtime.GOMAXPROCS), which defaults to the number of logical CPUs available to the process.

- Machine Threads (M): Machine threads are OS threads that execute goroutines. The Go runtime maps goroutines onto these threads, but there are generally fewer threads than goroutines.

Scheduler Design and Operation

The scheduler’s design revolves around the management of these three entities (G, P, M) to ensure efficient execution of goroutines. Here’s a closer look at its operation:

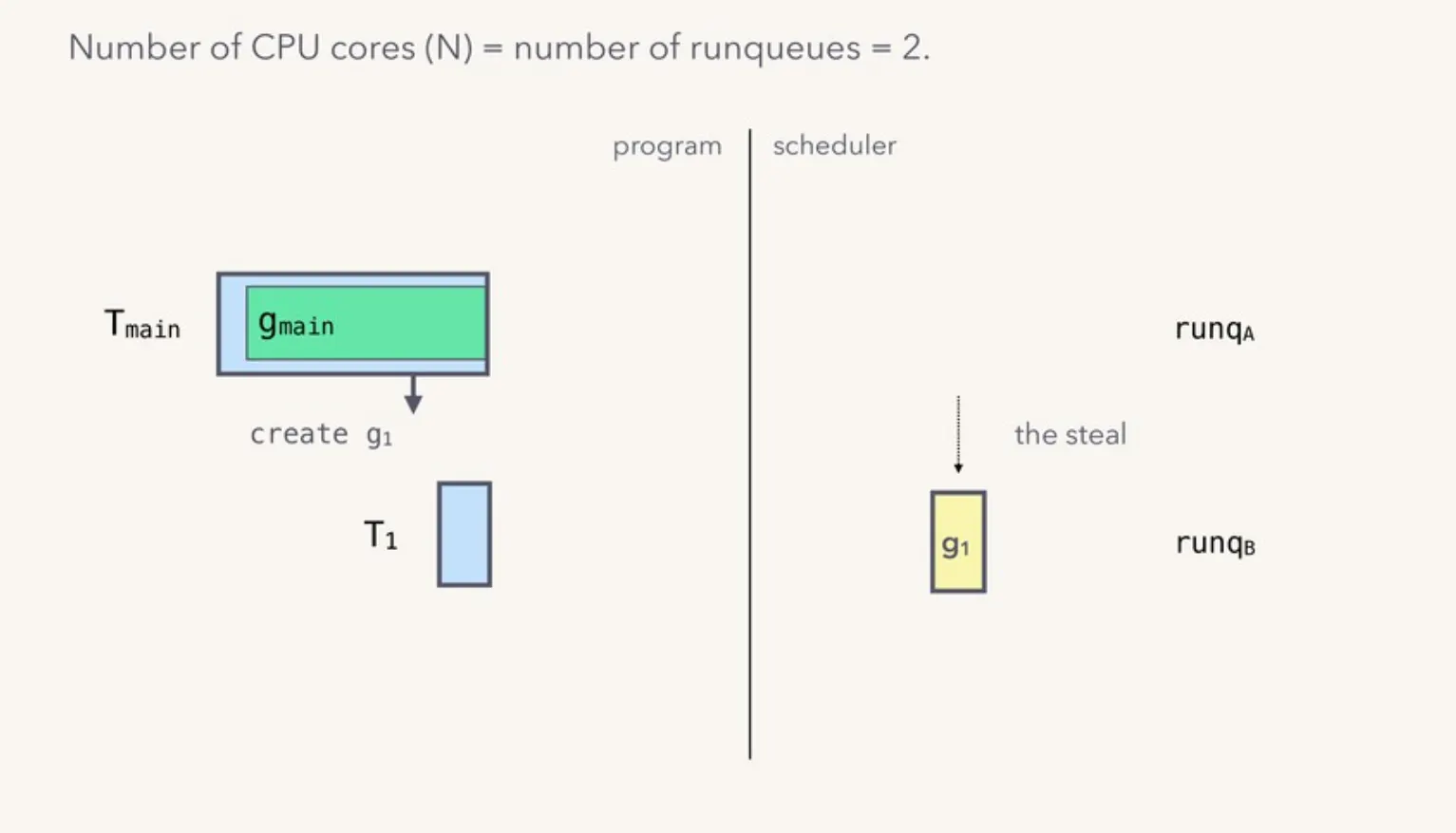

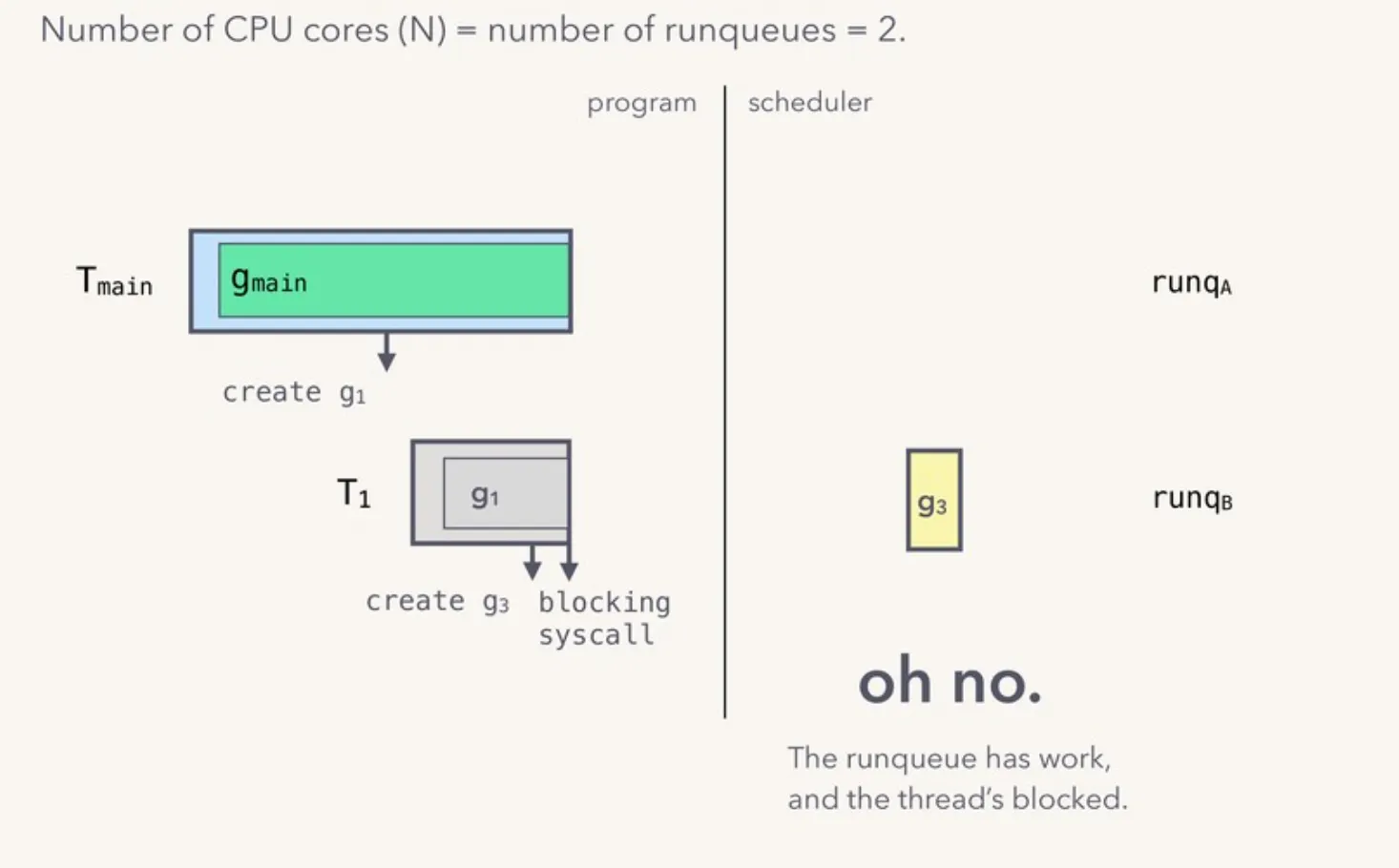

- Work Stealing: Each processor (P) maintains a local work queue of runnable goroutines. When a processor’s work queue is empty, it can steal goroutines from the work queues of other processors. This dynamic work distribution helps balance the load across multiple CPUs.

- Goroutine States: Goroutines can be in several states:

  - Runnable: Ready to run but not currently executing.

  - Running: Currently being executed by a machine thread.

  - Waiting: Blocked, waiting for some event (e.g., an I/O operation).

  - Dead: Finished execution; the runtime can reclaim or reuse its resources.

- Preemption: The scheduler preempts long-running goroutines so that a single goroutine cannot monopolize CPU time. Cooperative preemption points are inserted into the code during compilation (for example, in function prologues), and the runtime checks these points to decide whether to yield control. Since Go 1.14 the runtime also uses a mechanism called “asynchronous preemption”, which on most platforms interrupts the running thread with a signal, so even a tight loop that never reaches a cooperative preemption point can be stopped. In general, if sysmon detects a goroutine that has been running for longer than 10ms (with caveats), the goroutine is preempted and put on the global run queue. Since it essentially starved other goroutines, it would be unfair to put it straight back on a per-P run queue. The global run queue is checked less frequently than the local queues, so contention on it is usually not a real issue.
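Preemption is easy to observe in a small experiment. The sketch below pins the program to a single P, starts a goroutine that spins in a tight loop, and checks that main still wakes up from its sleep. It assumes Go 1.14 or later on a platform where asynchronous preemption is enabled; on older versions main could hang. The names spinThenWake and demoSleep are mine, not part of any API.

```go
package main

import (
	"fmt"
	"runtime"
	"sync/atomic"
	"time"
)

// demoSleep is an arbitrary duration chosen for this illustration.
const demoSleep = 20 * time.Millisecond

// spinThenWake limits the program to one P, starts a goroutine that spins
// without ever blocking or yielding, and returns how long main's sleep took.
// (Hypothetical helper; assumes asynchronous preemption is available.)
func spinThenWake(sleep time.Duration) time.Duration {
	prev := runtime.GOMAXPROCS(1)
	defer runtime.GOMAXPROCS(prev) // restore the previous setting

	var stop int32
	done := make(chan struct{})
	go func() {
		defer close(done)
		for atomic.LoadInt32(&stop) == 0 {
			// Busy loop: this goroutine never gives up the P voluntarily.
		}
	}()

	start := time.Now()
	time.Sleep(sleep) // main parks, so the spinner takes over the only P
	elapsed := time.Since(start)

	atomic.StoreInt32(&stop, 1) // ask the spinner to exit
	<-done
	return elapsed
}

func main() {
	fmt.Println("main resumed after", spinThenWake(demoSleep))
}
```

If main resumes at all, the runtime must have taken the P away from the spinning goroutine. The measured time is usually somewhat longer than the requested sleep, because main has to wait for the spinner to be preempted.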

Example: Creating and Running Goroutines

Let’s start with a basic example of creating and running goroutines:

    package main

    import (
        "fmt"
        "time"
    )

    // g1 prints the numbers 1 through 5, pausing 100ms after each one.
    func g1() {
        for i := 1; i <= 5; i++ {
            fmt.Println(i)
            time.Sleep(100 * time.Millisecond)
        }
    }

    func main() {
        go g1() // Start a new goroutine

        time.Sleep(1 * time.Second) // Give the goroutine time to run
    }
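Sleeping in main works for a demo, but it relies on guessing how long the goroutine needs. A common alternative is to wait explicitly with sync.WaitGroup. The variant below is a sketch of that idea; the function name runAndWait is my own, and it collects the numbers in a slice instead of printing them from the goroutine.

```go
package main

import (
	"fmt"
	"sync"
)

// runAndWait starts one goroutine that records 1..n and blocks until that
// goroutine has finished. (Hypothetical helper for illustration.)
func runAndWait(n int) []int {
	var (
		wg  sync.WaitGroup
		got []int
	)
	wg.Add(1)
	go func() {
		defer wg.Done()
		for i := 1; i <= n; i++ {
			got = append(got, i)
		}
	}()
	wg.Wait() // returns only after Done, so reading got here is safe
	return got
}

func main() {
	fmt.Println(runAndWait(5)) // prints [1 2 3 4 5]
}
```

With a WaitGroup, main exits as soon as the work is done rather than after a fixed delay.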

Detailed Scheduler Operation

- Initialization: The scheduler creates GOMAXPROCS processors (P), let’s say 2, based on the available CPU cores or the value set by the user. It also creates an initial machine thread (M) to start execution.

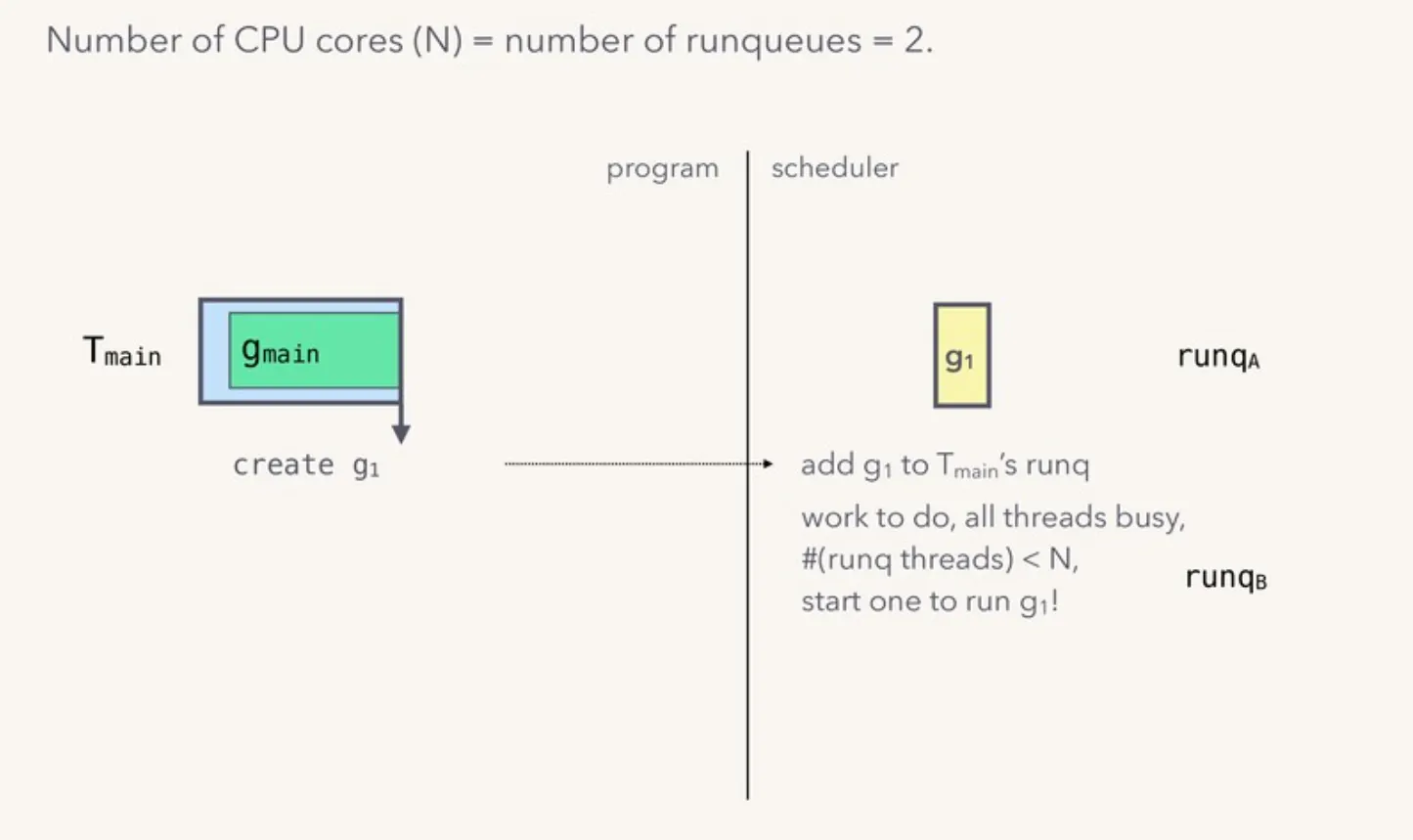

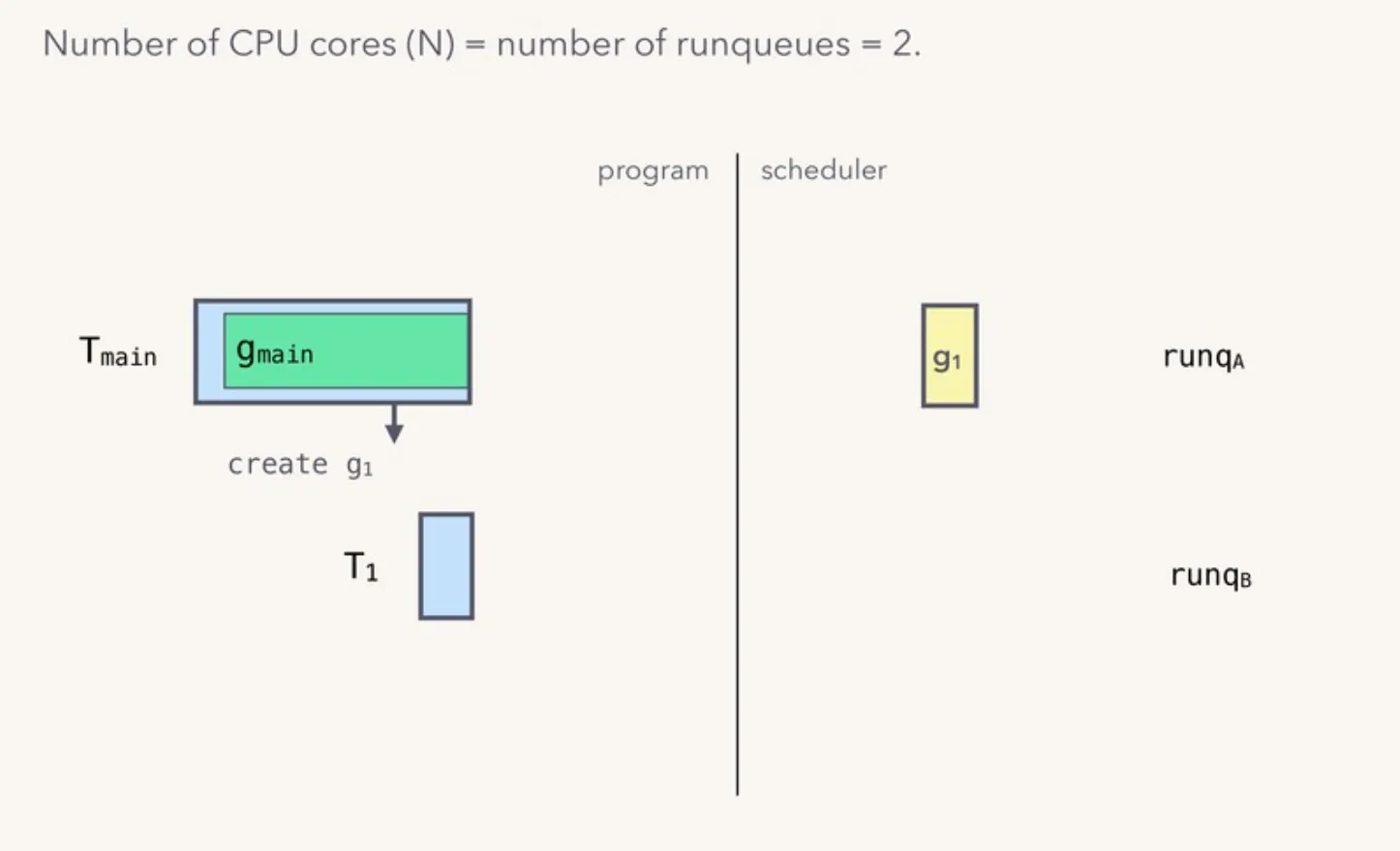

- Goroutine Creation: When a new goroutine is created using the go keyword, the runtime allocates a new goroutine (G) structure, initializes its stack, and places it in the local work queue of the current processor (P).

- Execution Loop:

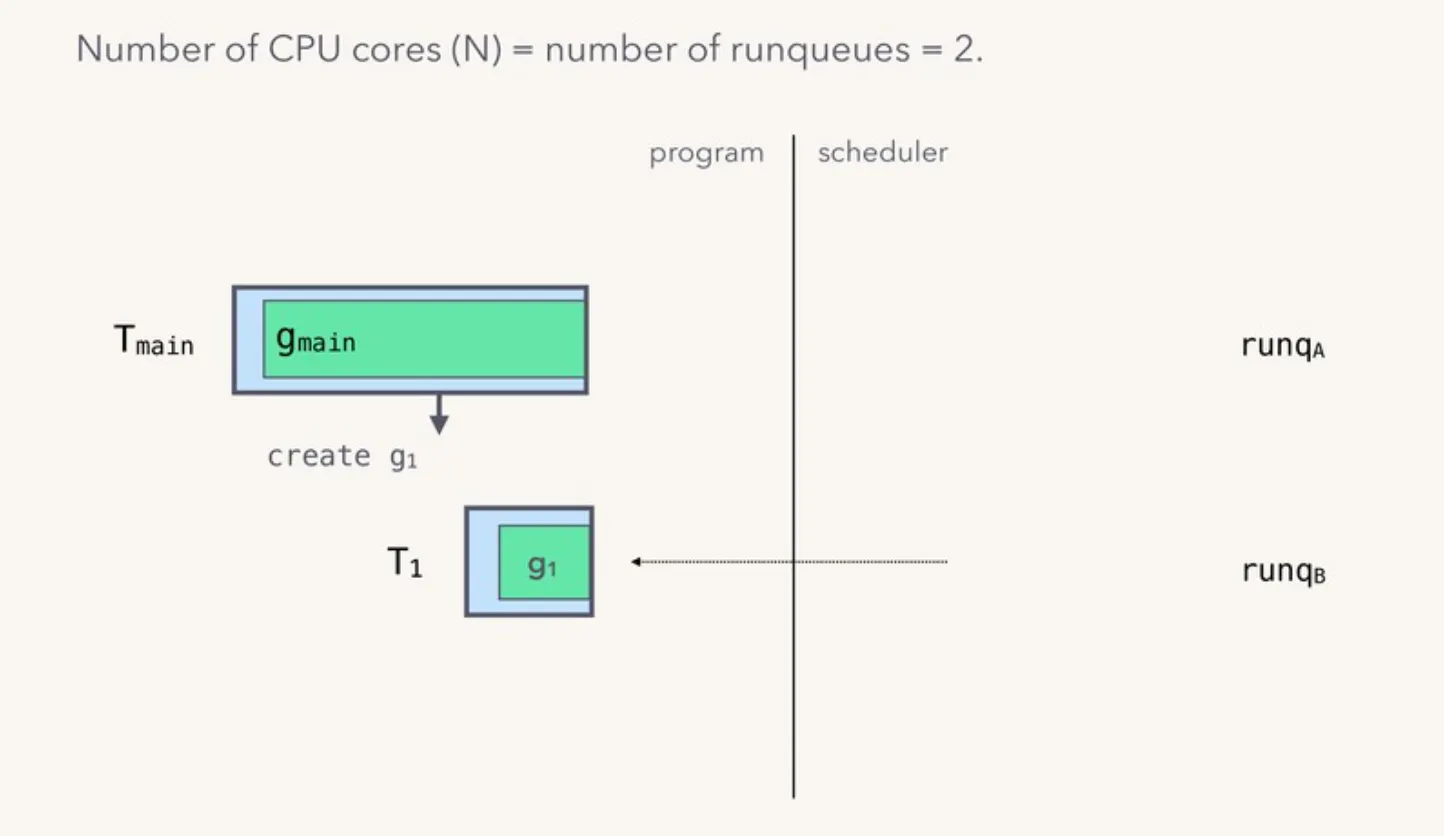

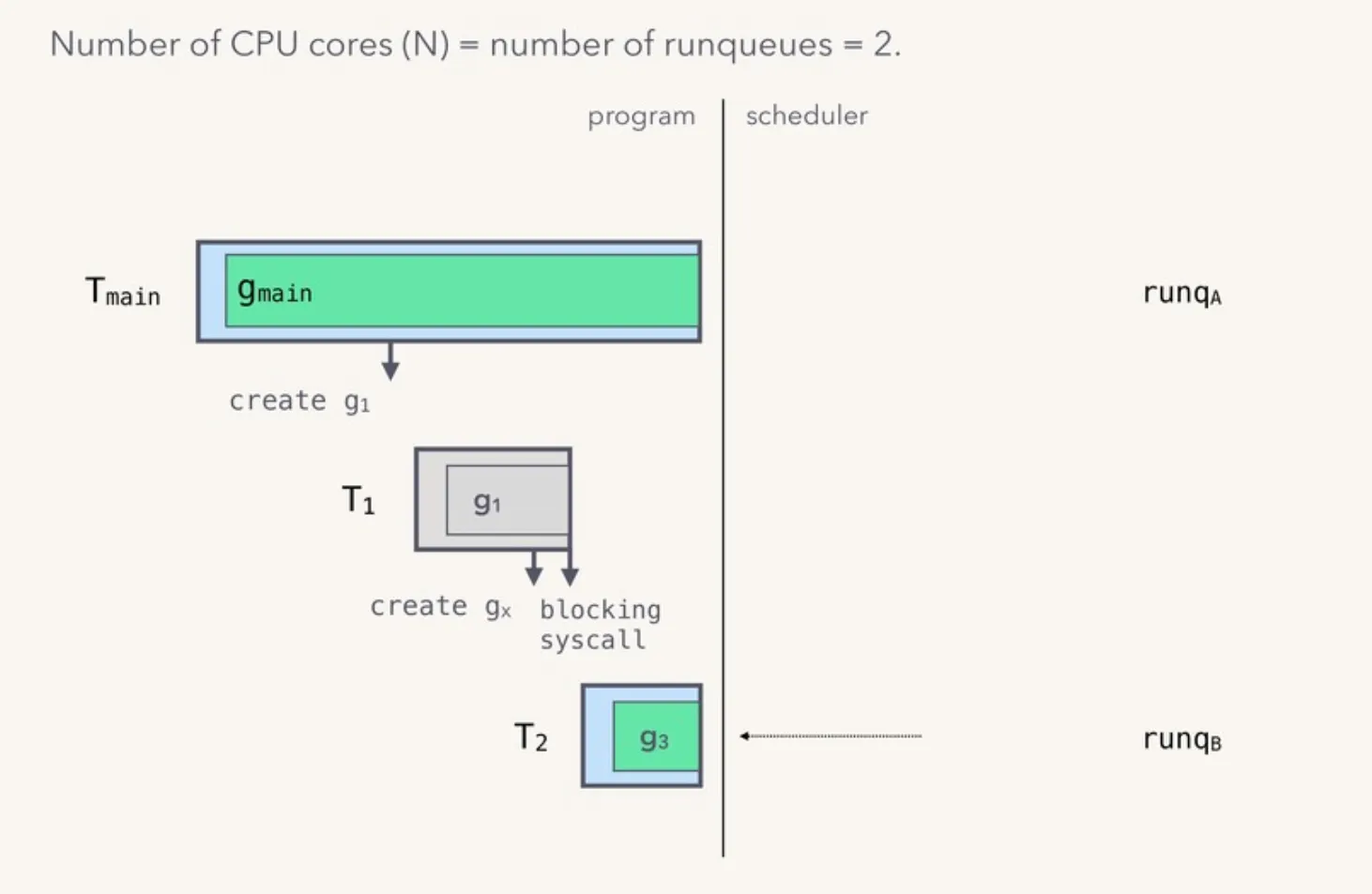

- Each machine thread (M) fetches goroutines from its associated processor’s work queue and executes them.

- If the local queue is empty, the thread attempts to steal goroutines from other processors.

- The runtime keeps track of the state of each goroutine, transitioning them between runnable, running, and waiting states as necessary.

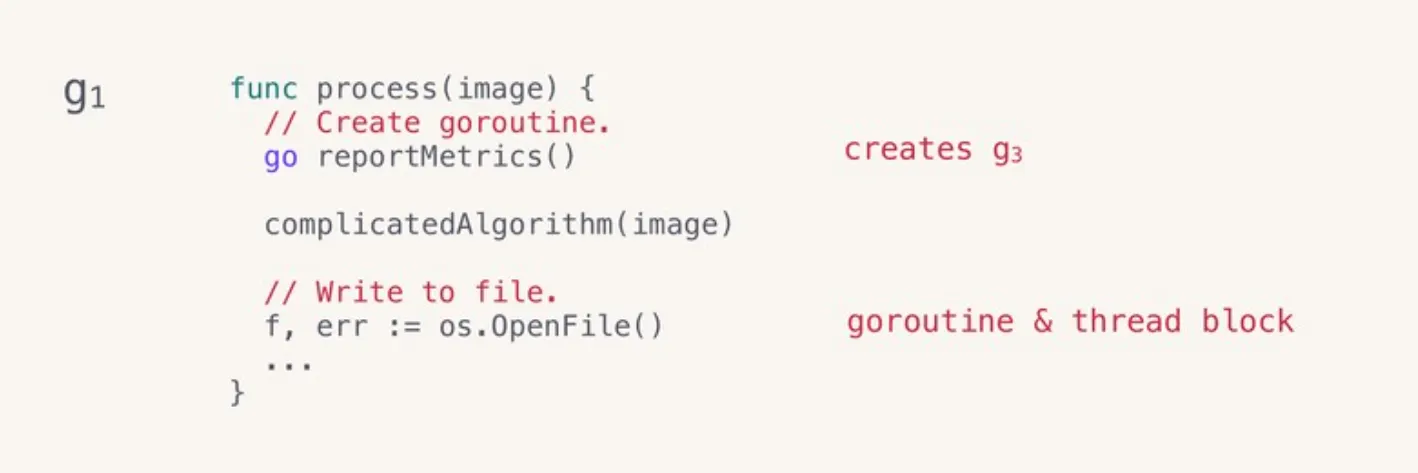

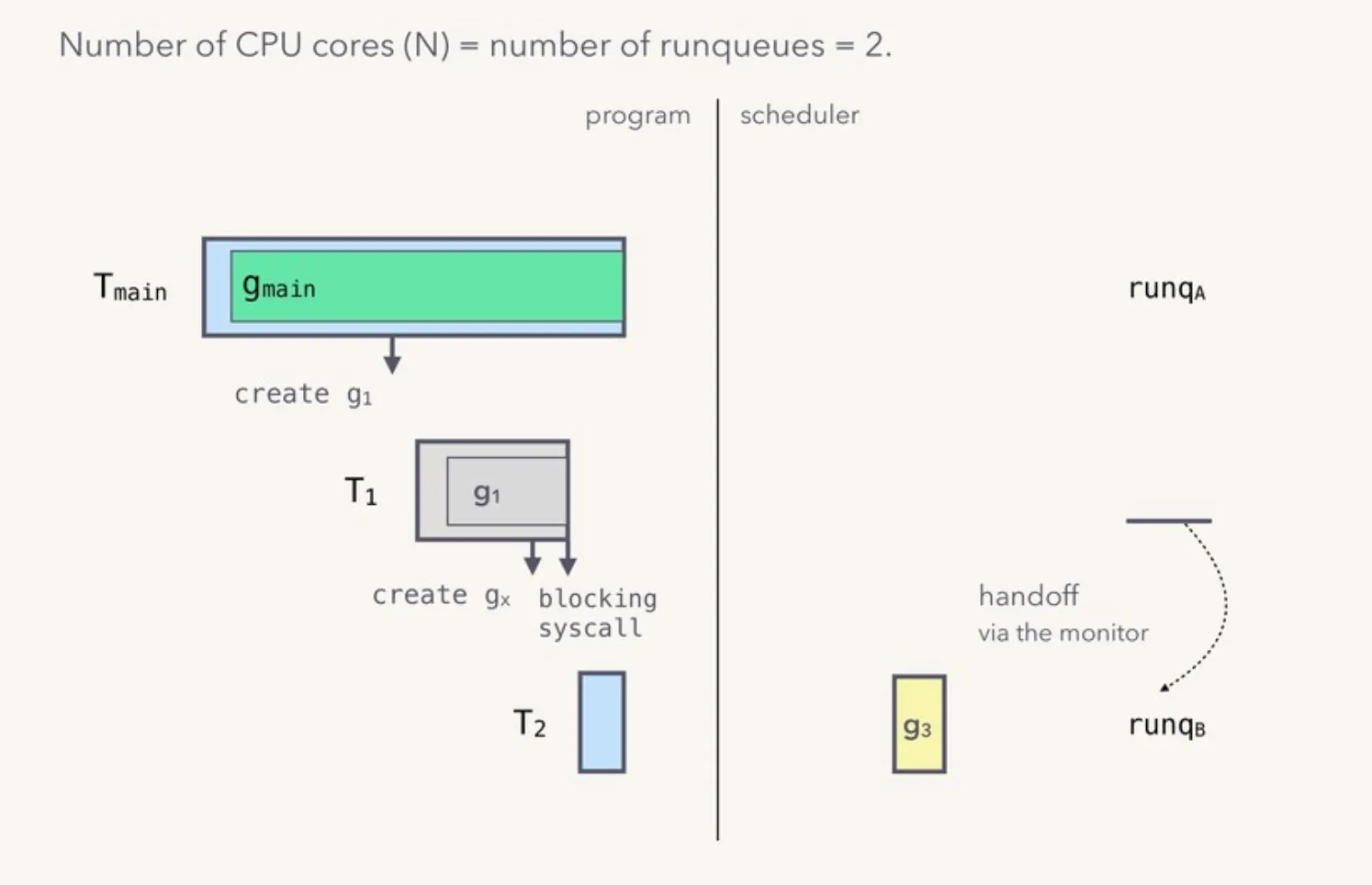

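- Blocking Operations: When a goroutine blocks on something the runtime manages (e.g., a channel, a lock, a timer, or network I/O), the runtime marks it as waiting and schedules another runnable goroutine on the same thread. When a goroutine blocks in a system call, the machine thread (M) blocks with it, so the runtime can hand the processor (P) over to another thread, which keeps running the remaining goroutines.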
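A quick way to see the waiting state in action is to let many goroutines block at once on a single P. The sketch below uses time.Sleep as a stand-in for a blocking operation; this is my assumption for the demo, and it exercises the parking path rather than the blocking system call path. The names napConcurrently, sleepers, and nap are made up for this example.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
	"time"
)

// Arbitrary values chosen for this illustration.
const (
	sleepers = 100
	nap      = 50 * time.Millisecond
)

// napConcurrently runs n goroutines that each sleep for d while the program
// is limited to one P, and returns the total wall-clock time.
// (Hypothetical helper for illustration.)
func napConcurrently(n int, d time.Duration) time.Duration {
	prev := runtime.GOMAXPROCS(1)
	defer runtime.GOMAXPROCS(prev) // restore the previous setting

	var wg sync.WaitGroup
	start := time.Now()
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			time.Sleep(d) // the goroutine is parked; the P is free for others
		}()
	}
	wg.Wait()
	return time.Since(start)
}

func main() {
	total := napConcurrently(sleepers, nap)
	fmt.Printf("%d goroutines each slept %v; total wall time %v\n", sleepers, nap, total)
}
```

If a waiting goroutine held on to the processor, the total would be close to 100 × 50ms. In practice it should land much nearer to a single 50ms nap, because each goroutine gives up the P as soon as it starts waiting.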

Advanced Features

- Spinning and Parking: To avoid excessive thread creation, the runtime uses a technique called “spinning” where idle machine threads (M) spin for a short period, hoping new work will arrive. If no work arrives, the thread is parked (blocked) and later reactivated when work becomes available.

- Sysmon: The runtime includes a system monitor (sysmon) that periodically wakes up to perform housekeeping tasks, such as preemption, monitoring goroutine states, and handling timers.

- Timers and Network Polling: The scheduler integrates with a timer system to handle timed operations efficiently. It also includes a network poller to manage network I/O, allowing goroutines to block on network operations without tying up machine threads.
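To get a feel for the network poller, the sketch below opens a number of loopback TCP connections, leaves one goroutine blocked in Read on each, and then asks the runtime how many OS threads it has created. It assumes the loopback interface is available; parkReaders is a name I made up, and the thread count is read from the standard "threadcreate" profile in runtime/pprof. The exact number varies by machine and Go version.

```go
package main

import (
	"fmt"
	"net"
	"runtime/pprof"
	"sync"
)

// parkReaders opens n loopback TCP connections, leaves one goroutine blocked
// in Read on each, and returns the number of OS threads created so far.
// (Hypothetical helper for illustration.)
func parkReaders(n int) (int, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return 0, err
	}
	defer ln.Close()

	var wg sync.WaitGroup
	var clients []net.Conn
	// Closing the client side makes every blocked Read return, so the
	// reader goroutines can exit before this function returns.
	defer func() {
		for _, c := range clients {
			c.Close()
		}
		wg.Wait()
	}()

	for i := 0; i < n; i++ {
		c, err := net.Dial("tcp", ln.Addr().String())
		if err != nil {
			return 0, err
		}
		clients = append(clients, c)

		s, err := ln.Accept()
		if err != nil {
			return 0, err
		}
		wg.Add(1)
		go func() {
			defer wg.Done()
			defer s.Close()
			s.Read(make([]byte, 1)) // waits until data arrives or the peer closes
		}()
	}
	return pprof.Lookup("threadcreate").Count(), nil
}

func main() {
	const conns = 50
	threads, err := parkReaders(conns)
	if err != nil {
		fmt.Println("setup failed:", err)
		return
	}
	fmt.Printf("%d blocked readers, %d OS threads created\n", conns, threads)
}
```

On a typical machine the thread count stays well below the number of blocked readers, which is what you would expect if waiting on the network does not tie up a thread per goroutine.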

Performance Considerations

- Efficient Context Switching: The lightweight nature of goroutines and the scheduler’s ability to manage them efficiently result in low context-switching overhead compared to traditional threads.

- Scalability: The work-stealing mechanism and dynamic load balancing allow Go programs to scale efficiently across multiple CPU cores, making it well-suited for concurrent applications.

- Responsiveness: Preemption and efficient handling of blocking operations ensure that Go applications remain responsive, even under heavy workloads.
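The work-stealing idea behind the scalability point can be modelled in a few lines. What follows is a toy model, not the runtime's actual code: the P type, steal, and findWork are names I invented, goroutines are plain integer IDs, victims are tried in a fixed order, and there is no locking or global queue. It only shows the shape of the algorithm: run from the local queue, and when it is empty, take roughly half of another P's work.

```go
package main

import "fmt"

// P is a toy stand-in for a scheduler processor: an ID plus a local run
// queue of goroutine IDs. (Hypothetical type for illustration.)
type P struct {
	id    int
	local []int
}

// steal moves half of the victim's queue (rounded up) to the thief and
// returns how many goroutines were moved.
func steal(thief, victim *P) int {
	n := (len(victim.local) + 1) / 2
	if n == 0 {
		return 0
	}
	thief.local = append(thief.local, victim.local[:n]...)
	victim.local = victim.local[n:]
	return n
}

// findWork returns the next goroutine ID for p to run. If p's local queue
// is empty, it first tries to steal from the other processors in order.
func findWork(p *P, all []*P) (int, bool) {
	if len(p.local) == 0 {
		for _, victim := range all {
			if victim != p && steal(p, victim) > 0 {
				break
			}
		}
	}
	if len(p.local) == 0 {
		return 0, false // nothing runnable anywhere
	}
	g := p.local[0]
	p.local = p.local[1:]
	return g, true
}

func main() {
	p0 := &P{id: 0, local: []int{1, 2, 3, 4, 5, 6}}
	p1 := &P{id: 1} // idle processor with an empty queue
	g, ok := findWork(p1, []*P{p0, p1})
	fmt.Println(g, ok, len(p0.local), len(p1.local)) // prints: 1 true 3 2
}
```

After one call, the idle processor has taken three goroutines from the busy one, runs the first, and keeps two queued, so both processors now have work.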

Conclusion

The Go runtime scheduler is a powerful and efficient system for managing concurrency. Its design, which includes work stealing, preemption, and efficient handling of blocking operations, allows Go to support massive concurrency with minimal overhead. In summary, Go’s scheduler multiplexes a large number of goroutines onto a smaller number of OS threads. This is known as the M:N model (M goroutines are multiplexed onto N OS threads). By understanding the internals of the scheduler, developers can better leverage its capabilities to build high-performance, scalable applications.

Thank you for exploring the intricacies of the Go runtime scheduler with me. Stay tuned for our next dive into another fascinating topic. Until then, keep pushing the boundaries of what’s possible!